Science advanced dramatically during the 20th century. There were new and radical developments in the physical, life and human sciences, building on the progress made in the 19th century.[1]

The development of post-Newtonian theories in physics, such as special relativity, general relativity, and quantum mechanics led to the development of nuclear weapons. New models of the structure of the atom led to developments in theories of chemistry and the development of new materials such as nylon and plastics. Advances in biology led to large increases in food production, as well as the elimination of diseases such as polio.

A large number of new technologies were developed in the 20th century. Technologies such as electricity, the incandescent light bulb, the automobile and the phonograph, first developed at the end of the 19th century, were perfected and universally deployed. The first airplane flight occurred in 1903, and by the end of the century large airplanes such as the Boeing 777 and Airbus A330 flew thousands of miles in a matter of hours. The development of the television and computers caused massive changes in the dissemination of information.

Astronomy and spaceflight

- A much better understanding of the evolution of the universe was achieved, its age (about 13.8 billion years) was determined, and the Big Bang theory on its origin was proposed and generally accepted.

- The age of the Solar System, including Earth, was determined, and it turned out to be much older than believed earlier: more than 4 billion years, rather than the 20 million years suggested by Lord Kelvin in 1862.[2]

- The planets of the Solar System and their moons were closely observed via numerous space probes. Pluto was discovered in 1930 on the edge of the solar system, although in the early 21st century, it was reclassified as a dwarf planet (planetoid) instead of a planet proper, leaving eight planets.

- No trace of life was discovered on any of the other planets in the Solar System, although it remained undetermined whether some forms of primitive life might exist, or might have existed, somewhere. Extrasolar planets were observed for the first time.

- In 1969, Apollo 11 was launched towards the Moon and Neil Armstrong and Buzz Aldrin became the first persons from Earth to walk on another celestial body.

- That same year, Soviet astronomer Victor Safronov published his book Evolution of the protoplanetary cloud and formation of the Earth and the planets. In this book, almost all major problems of the planetary formation process were formulated and some of them solved. Safronov's ideas were further developed in the works of George Wetherill, who discovered runaway accretion.

- The Space Race between the United States and the Soviet Union gave a peaceful outlet to the political and military tensions of the Cold War, leading to the first human spaceflight with the Soviet Union's Vostok 1 mission in 1961, and man's first landing on another world—the Moon—with America's Apollo 11 mission in 1969. Later, the first space station was launched by the Soviet space program. The United States developed the first (and to date only) reusable spacecraft system with the Space Shuttle program, first launched in 1981. As the century ended, a permanent human presence in space was being founded with the ongoing construction of the International Space Station.

- In addition to human spaceflight, uncrewed space probes became a practical and relatively inexpensive form of exploration. The first orbiting space probe, Sputnik 1, was launched by the Soviet Union in 1957. Over time, a massive system of artificial satellites was placed into orbit around Earth. These satellites greatly advanced navigation, communications, military intelligence, geology, climate, and numerous other fields. Also, by the end of the 20th century, uncrewed probes had visited the Moon, Mercury, Venus, Mars, Jupiter, Saturn, Uranus, Neptune, and various asteroids and comets. The Hubble Space Telescope, launched in 1990, greatly expanded our understanding of the Universe and brought brilliant images to TV and computer screens around the world.

Biology and medicine

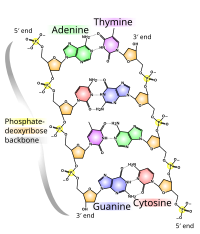

- Genetics was unanimously accepted and significantly developed. The structure of DNA was determined in 1953 by James Watson,[3][4] Francis Crick,[3][4] Rosalind Franklin[4] and Maurice Wilkins,[3][4] followed by the development of techniques for reading DNA sequences, culminating in the start of the Human Genome Project (not finished in the 20th century) and the cloning of the first mammal in 1996.

- The role of sexual reproduction in evolution was understood, and bacterial conjugation was discovered.

- Various sciences converged in the formulation of the modern evolutionary synthesis (produced between 1936 and 1947), which provided a widely accepted account of evolution.

- Placebo-controlled, randomized, blinded clinical trials became a powerful tool for testing new medicines.

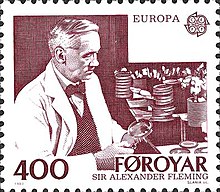

- Antibiotics drastically reduced mortality from bacterial diseases and their prevalence.

- A vaccine was developed for polio, ending a worldwide epidemic. Effective vaccines were also developed for a number of other serious infectious diseases, including influenza, diphtheria, pertussis (whooping cough), tetanus, measles, mumps, rubella (German measles), chickenpox, hepatitis A, and hepatitis B.

- Epidemiology and vaccination led to the eradication of the smallpox virus in humans.

- X-rays became a powerful diagnostic tool for a wide spectrum of diseases, from bone fractures to cancer. In the 1960s, computerized tomography was invented. Other important diagnostic tools developed were sonography and magnetic resonance imaging.

- Development of vitamins virtually eliminated scurvy and other vitamin-deficiency diseases from industrialized societies.

- New psychiatric drugs were developed. These include antipsychotics for treating hallucinations and delusions, and antidepressants for treating depression.

- The role of tobacco smoking in the causation of cancer and other diseases was proven during the 1950s (see British Doctors Study).

- New methods for cancer treatment, including chemotherapy, radiation therapy, and immunotherapy, were developed. As a result, cancer could often be cured or placed in remission.

- The development of blood typing and blood banking made blood transfusion safe and widely available.

- The invention and development of immunosuppressive drugs and tissue typing made organ and tissue transplantation a clinical reality.

- New methods for heart surgery were developed, including pacemakers and artificial hearts.

- Cocaine/crack and heroin were found to be dangerous addictive drugs, and their wide usage was outlawed; mind-altering drugs such as LSD and MDMA were discovered and later outlawed. In many countries, a war on drugs caused prices to soar 10–20 times higher, leading to profitable black market drug dealing, and in some countries (e.g. the United States) to 80% of prison sentences being related to drug use by the 1990s.

- Contraceptive drugs were developed, which reduced population growth rates in industrialized countries, as well as decreased the taboo of premarital sex throughout many western countries.

- The development of medical insulin during the 1920s helped raise the life expectancy of diabetics to three times what it had been earlier.

- Vaccines, hygiene and clean water improved health and decreased mortality rates, especially among infants and the young.

Notable diseases

- An influenza pandemic, Spanish Flu, killed anywhere from 20 to 100 million people between 1918 and 1919.

- A new viral disease, called the Human Immunodeficiency Virus, or HIV, arose in Africa and subsequently killed millions of people throughout the world. HIV leads to a syndrome called Acquired Immunodeficiency Syndrome, or AIDS. Treatments for HIV remained inaccessible to many people living with AIDS and HIV in developing countries, and a cure has yet to be discovered.

- Because of increased life spans, the prevalence of cancer, Alzheimer's disease, Parkinson's disease, and other diseases of old age increased slightly.

- Sedentary lifestyles, due to labour-saving devices and technology, along with the increase in home entertainment and technology such as television, video games, and the internet contributed to an "epidemic" of obesity, at first in the rich countries, but by the end of the 20th century spreading to the developing world.

Chemistry

In 1903, Mikhail Tsvet invented chromatography, an important analytic technique. In 1904, Hantaro Nagaoka proposed an early nuclear model of the atom, where electrons orbit a dense massive nucleus. In 1905, Fritz Haber and Carl Bosch developed the Haber process for making ammonia, a milestone in industrial chemistry with deep consequences in agriculture. The Haber process, or Haber-Bosch process, combined nitrogen and hydrogen to form ammonia in industrial quantities for production of fertilizer and munitions. The food production for half the world's current population depends on this method for producing fertilizer. Haber, along with Max Born, proposed the Born–Haber cycle as a method for evaluating the lattice energy of an ionic solid. Haber has also been described as the "father of chemical warfare" for his work developing and deploying chlorine and other poisonous gases during World War I.

In 1905, Albert Einstein explained Brownian motion in a way that definitively proved atomic theory. Leo Baekeland invented bakelite, one of the first commercially successful plastics. In 1909, American physicist Robert Andrews Millikan – who had studied in Europe under Walther Nernst and Max Planck – measured the charge of individual electrons with unprecedented accuracy through the oil drop experiment, in which he measured the electric charges on tiny falling water (and later oil) droplets. His study established that any particular droplet's electrical charge is a multiple of a definite, fundamental value – the electron's charge – and thus a confirmation that all electrons have the same charge and mass. Beginning in 1912, he spent several years investigating and finally proving Albert Einstein's proposed linear relationship between energy and frequency, and providing the first direct photoelectric support for the Planck constant. In 1923 Millikan was awarded the Nobel Prize for Physics.

In 1909, S. P. L. Sørensen invented the pH concept and developed methods for measuring acidity. In 1911, Antonius Van den Broek proposed the idea that the elements on the periodic table are more properly organized by positive nuclear charge rather than atomic weight. In 1911, the first Solvay Conference was held in Brussels, bringing together many of the most prominent scientists of the day. In 1912, William Henry Bragg and William Lawrence Bragg proposed Bragg's law and established the field of X-ray crystallography, an important tool for elucidating the crystal structure of substances. In 1912, Peter Debye developed the concept of the molecular dipole to describe asymmetric charge distribution in some molecules.

In 1913, Niels Bohr, a Danish physicist, introduced the concepts of quantum mechanics to atomic structure by proposing what is now known as the Bohr model of the atom, where electrons exist only in strictly defined circular orbits around the nucleus similar to rungs on a ladder. The Bohr Model is a planetary model in which the negatively charged electrons orbit a small, positively charged nucleus similar to the planets orbiting the Sun (except that the orbits are not planar) – the gravitational force of the solar system is mathematically akin to the attractive Coulomb (electrical) force between the positively charged nucleus and the negatively charged electrons.
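As a rough illustration of the "rungs on a ladder" picture, the sketch below lists the first few allowed orbits of hydrogen in the Bohr model. The scaling rules (orbit radius growing as n squared, energy as minus one over n squared) and the constants are standard textbook values rather than anything stated in this article, and the function name `bohr_orbit` is made up for the example.

```python
# Approximate textbook constants for hydrogen (assumed for this sketch).
BOHR_RADIUS_NM = 0.0529    # ground-state orbit radius, in nanometres
RYDBERG_ENERGY_EV = 13.6   # ionisation energy of hydrogen, in electronvolts


def bohr_orbit(n):
    """Return (radius in nm, energy in eV) of the n-th Bohr orbit of hydrogen."""
    if n < 1:
        raise ValueError("the quantum number n must be a positive integer")
    radius = n ** 2 * BOHR_RADIUS_NM       # orbits get wider as n squared
    energy = -RYDBERG_ENERGY_EV / n ** 2   # levels crowd toward zero energy
    return radius, energy


# Print the first four "rungs": only these discrete orbits are allowed.
for n in range(1, 5):
    radius, energy = bohr_orbit(n)
    print(f"n={n}: radius = {radius:.4f} nm, energy = {energy:.2f} eV")
```

The negative energies indicate bound states; in this picture, the gaps between rungs correspond to the photon energies seen in the hydrogen spectrum.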

In 1913, Henry Moseley, working from Van den Broek's earlier idea, introduced the concept of atomic number to fix inadequacies of Mendeleev's periodic table, which had been based on atomic weight. The peak of Frederick Soddy's career in radiochemistry was in 1913 with his formulation of the concept of isotopes, which stated that certain elements exist in two or more forms which have different atomic weights but which are indistinguishable chemically. He is remembered for proving the existence of isotopes of certain radioactive elements, and is also credited, along with others, with the discovery of the element protactinium in 1917. In 1913, J. J. Thomson expanded on the work of Wien by showing that charged subatomic particles can be separated by their mass-to-charge ratio, a technique known as mass spectrometry.

In 1916, Gilbert N. Lewis published his seminal article "The Atom and the Molecule", which suggested that a chemical bond is a pair of electrons shared by two atoms. Lewis's model equated the classical chemical bond with the sharing of a pair of electrons between the two bonded atoms. Lewis introduced the "electron dot diagrams" in this paper to symbolize the electronic structures of atoms and molecules. Now known as Lewis structures, they are discussed in virtually every introductory chemistry book. In 1923, Lewis developed the electron pair theory of acids and bases: he redefined an acid as any atom or molecule with an incomplete octet that was thus capable of accepting electrons from another atom; bases were, correspondingly, electron donors. His theory is known as the concept of Lewis acids and bases. In 1923, G. N. Lewis and Merle Randall published Thermodynamics and the Free Energy of Chemical Substances, the first modern treatise on chemical thermodynamics.

The 1920s saw a rapid adoption and application of Lewis's model of the electron-pair bond in the fields of organic and coordination chemistry. In organic chemistry, this was primarily due to the efforts of the British chemists Arthur Lapworth, Robert Robinson, Thomas Lowry, and Christopher Ingold; while in coordination chemistry, Lewis's bonding model was promoted through the efforts of the American chemist Maurice Huggins and the British chemist Nevil Sidgwick.

Quantum chemistry

Some view the birth of quantum chemistry in the discovery of the Schrödinger equation and its application to the hydrogen atom in 1926.[citation needed] However, the 1927 article of Walter Heitler and Fritz London[5] is often recognised as the first milestone in the history of quantum chemistry. This was the first application of quantum mechanics to the diatomic hydrogen molecule, and thus to the phenomenon of the chemical bond. In the following years much progress was made by Edward Teller, Robert S. Mulliken, Max Born, J. Robert Oppenheimer, Linus Pauling, Erich Hückel, Douglas Hartree and Vladimir Aleksandrovich Fock, to name a few.[citation needed]

Still, skepticism remained as to the general power of quantum mechanics applied to complex chemical systems.[citation needed] The situation around 1930 is described by Paul Dirac:[6]

The underlying physical laws necessary for the mathematical theory of a large part of physics and the whole of chemistry are thus completely known, and the difficulty is only that the exact application of these laws leads to equations much too complicated to be soluble. It therefore becomes desirable that approximate practical methods of applying quantum mechanics should be developed, which can lead to an explanation of the main features of complex atomic systems without too much computation.

Hence the quantum mechanical methods developed in the 1930s and 1940s are often referred to as theoretical molecular or atomic physics to underline the fact that they were more the application of quantum mechanics to chemistry and spectroscopy than answers to chemically relevant questions. A milestone in quantum chemistry was the seminal 1951 paper of Clemens C. J. Roothaan on the Roothaan equations.[7] It opened the avenue to the solution of the self-consistent field equations for small molecules like hydrogen or nitrogen. Those computations were performed with the help of tables of integrals which were computed on the most advanced computers of the time.[citation needed]

In the 1940s many physicists turned from molecular or atomic physics to nuclear physics (like J. Robert Oppenheimer or Edward Teller). Glenn T. Seaborg was an American nuclear chemist best known for his work on isolating and identifying transuranium elements (those heavier than uranium). He shared the 1951 Nobel Prize for Chemistry with Edwin Mattison McMillan for their independent discoveries of transuranium elements. Seaborgium was named in his honour, making him one of three people, along with Albert Einstein and Yuri Oganessian, for whom a chemical element was named during his lifetime.

Molecular biology and biochemistry

By the mid-20th century, in principle, the integration of physics and chemistry was extensive, with chemical properties explained as the result of the electronic structure of the atom; Linus Pauling's book The Nature of the Chemical Bond used the principles of quantum mechanics to deduce bond angles in ever-more complicated molecules. However, though some principles deduced from quantum mechanics were able to predict qualitatively some chemical features of biologically relevant molecules, they were, until the end of the 20th century, more a collection of rules, observations, and recipes than rigorous ab initio quantitative methods.[citation needed]

This heuristic approach triumphed in 1953 when James Watson and Francis Crick deduced the double helical structure of DNA by constructing models constrained and informed by knowledge of the chemistry of the constituent parts and the X-ray diffraction patterns obtained by Rosalind Franklin.[8] This discovery led to an explosion of research into the biochemistry of life.

In the same year, the Miller–Urey experiment, conducted by Stanley Miller and Harold Urey, demonstrated that basic constituents of protein, simple amino acids, could themselves be built up from simpler molecules in a simulation of primordial processes on Earth. Though many questions remain about the true nature of the origin of life, this was the first attempt by chemists to study hypothetical processes in the laboratory under controlled conditions.[9]

In 1983 Kary Mullis devised a method for the in-vitro amplification of DNA, known as the polymerase chain reaction (PCR), which revolutionized the chemical processes used in the laboratory to manipulate it. PCR could be used to synthesize specific pieces of DNA and made possible the sequencing of DNA of organisms, which culminated in the huge human genome project.

An important piece of the double helix puzzle was solved by Matthew Meselson, one of Pauling's students, and Frank Stahl; the result of their collaboration (the Meselson–Stahl experiment) has been called "the most beautiful experiment in biology".

They used a centrifugation technique that sorted molecules according to differences in weight. Because nitrogen atoms are a component of DNA, they were labelled and therefore tracked in replication in bacteria.

Late 20th century

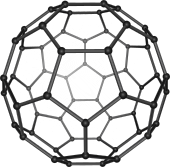

In 1970, John Pople developed the Gaussian program, greatly easing computational chemistry calculations.[10] In 1971, Yves Chauvin offered an explanation of the reaction mechanism of olefin metathesis reactions.[11] In 1975, Karl Barry Sharpless and his group discovered stereoselective oxidation reactions including Sharpless epoxidation,[12][13] Sharpless asymmetric dihydroxylation,[14][15][16] and Sharpless oxyamination.[17][18][19] In 1985, Harold Kroto, Robert Curl and Richard Smalley discovered fullerenes, a class of large carbon molecules superficially resembling the geodesic dome designed by architect R. Buckminster Fuller.[20] In 1991, Sumio Iijima used electron microscopy to discover a type of cylindrical fullerene known as a carbon nanotube, though earlier work had been done in the field as early as 1951. This material is an important component in the field of nanotechnology.[21] In 1994, Robert A. Holton and his group achieved the first total synthesis of Taxol.[22][23][24] In 1995, Eric Cornell and Carl Wieman produced the first Bose–Einstein condensate, a substance that displays quantum mechanical properties on the macroscopic scale.[25]

Earth science

In 1912 Alfred Wegener proposed the theory of continental drift.[26] This theory suggests that the shapes of continents and matching coastline geology between some continents indicate they were joined in the past and formed a single landmass known as Pangaea; thereafter they separated and drifted like rafts over the ocean floor, eventually reaching their present positions. Additionally, the theory of continental drift offered a possible explanation for the formation of mountains; plate tectonics built on the theory of continental drift.

Unfortunately, Wegener provided no convincing mechanism for this drift, and his ideas were not generally accepted during his lifetime. Arthur Holmes accepted Wegener's theory and provided a mechanism, mantle convection, to cause the continents to move.[27] However, it was not until after the Second World War that new evidence started to accumulate that supported continental drift. There followed a period of 20 extremely exciting years in which the theory of continental drift developed from being believed by a few to being the cornerstone of modern geology. Beginning in 1947 research found new evidence about the ocean floor, and in 1960 Bruce C. Heezen published the concept of mid-ocean ridges. Soon after this, Robert S. Dietz and Harry H. Hess proposed that the oceanic crust forms as the seafloor spreads apart along mid-ocean ridges in seafloor spreading.[28] This was seen as confirmation of mantle convection and so the major stumbling block to the theory was removed. Geophysical evidence suggested lateral motion of continents and that oceanic crust is younger than continental crust. This geophysical evidence also spurred the hypothesis of paleomagnetism, the record of the orientation of the Earth's magnetic field recorded in magnetic minerals. British geophysicist S. K. Runcorn suggested the concept of paleomagnetism from his finding that the continents had moved relative to the Earth's magnetic poles. Tuzo Wilson, who was a promoter of the sea floor spreading hypothesis and continental drift from the very beginning,[29] added the concept of transform faults to the model, completing the classes of fault types necessary to make the mobility of the plates on the globe function.[30] A symposium on continental drift[31] held at the Royal Society of London in 1965 must be regarded as the official start of the acceptance of plate tectonics by the scientific community. The abstracts from the symposium are issued as Blackett, Bullard, Runcorn; 1965. In this symposium, Edward Bullard and co-workers showed with a computer calculation how the continents along both sides of the Atlantic would best fit to close the ocean, which became known as the famous "Bullard's Fit". By the late 1960s the weight of the available evidence had made continental drift the generally accepted theory.

Other theories of the causes of climate change fared no better. The principal advances were in observational paleoclimatology, as scientists in various fields of geology worked out methods to reveal ancient climates. Wilmot H. Bradley found that annual varves of clay laid down in lake beds showed climate cycles. Andrew Ellicott Douglass saw strong indications of climate change in tree rings. Noting that the rings were thinner in dry years, he reported climate effects from solar variations, particularly in connection with the 17th-century dearth of sunspots (the Maunder Minimum) noticed previously by William Herschel and others. Other scientists, however, found good reason to doubt that tree rings could reveal anything beyond random regional variations. The value of tree rings for climate study was not solidly established until the 1960s.[32][33]

Through the 1930s the most persistent advocate of a solar-climate connection was astrophysicist Charles Greeley Abbot. By the early 1920s, he had concluded that the solar "constant" was misnamed: his observations showed large variations, which he connected with sunspots passing across the face of the Sun. He and a few others pursued the topic into the 1960s, convinced that sunspot variations were a main cause of climate change. Other scientists were skeptical.[32][33] Nevertheless, attempts to connect the solar cycle with climate cycles were popular in the 1920s and 1930s. Respected scientists announced correlations that they insisted were reliable enough to make predictions. Sooner or later, every prediction failed, and the subject fell into disrepute.[34]

Meanwhile Milutin Milankovitch, building on James Croll's theory, improved the tedious calculations of the varying distances and angles of the Sun's radiation as the Sun and Moon gradually perturbed the Earth's orbit. Some observations of varves (layers seen in the mud covering the bottom of lakes) matched the prediction of a Milankovitch cycle lasting about 21,000 years. However, most geologists dismissed the astronomical theory, for they could not fit Milankovitch's timing to the accepted sequence, which had only four ice ages, all of them much longer than 22,000 years.[35]

In 1938 Guy Stewart Callendar attempted to revive Arrhenius's greenhouse-effect theory. Callendar presented evidence that both temperature and the CO2 level in the atmosphere had been rising over the past half-century, and he argued that newer spectroscopic measurements showed that the gas was effective in absorbing infrared in the atmosphere. Nevertheless, most scientific opinion continued to dispute or ignore the theory.[36]

Another clue to the nature of climate change came in the mid-1960s from analysis of deep-sea cores by Cesare Emiliani and analysis of ancient corals by Wallace Broecker and collaborators. Rather than four long ice ages, they found a large number of shorter ones in a regular sequence. It appeared that the timing of ice ages was set by the small orbital shifts of the Milankovitch cycles. While the matter remained controversial, some began to suggest that the climate system is sensitive to small changes and can readily be flipped from a stable state into a different one.[35]

Scientists meanwhile began using computers to develop more sophisticated versions of Arrhenius's calculations. In 1967, taking advantage of the ability of digital computers to integrate absorption curves numerically, Syukuro Manabe and Richard Wetherald made the first detailed calculation of the greenhouse effect incorporating convection (the "Manabe-Wetherald one-dimensional radiative-convective model").[37][38] They found that, in the absence of unknown feedbacks such as changes in clouds, a doubling of carbon dioxide from the current level would result in approximately 2 °C increase in global temperature.

By the 1960s, aerosol pollution ("smog") had become a serious local problem in many cities, and some scientists began to consider whether the cooling effect of particulate pollution could affect global temperatures. Scientists were unsure whether the cooling effect of particulate pollution or warming effect of greenhouse gas emissions would predominate, but regardless, began to suspect that human emissions could be disruptive to climate in the 21st century if not sooner. In his 1968 book The Population Bomb, Paul R. Ehrlich wrote, "the greenhouse effect is being enhanced now by the greatly increased level of carbon dioxide... [this] is being countered by low-level clouds generated by contrails, dust, and other contaminants ... At the moment we cannot predict what the overall climatic results will be of our using the atmosphere as a garbage dump."[39]

A 1968 study by the Stanford Research Institute for the American Petroleum Institute noted:[40]

If the earth's temperature increases significantly, a number of events might be expected to occur, including the melting of the Antarctic ice cap, a rise in sea levels, warming of the oceans, and an increase in photosynthesis. [..] Revelle makes the point that man is now engaged in a vast geophysical experiment with his environment, the earth. Significant temperature changes are almost certain to occur by the year 2000 and these could bring about climatic changes.

In 1969, NATO was the first candidate to deal with climate change on an international level. It was planned then to establish a hub of research and initiatives of the organization in the civil area, dealing with environmental topics[41] such as acid rain and the greenhouse effect. The suggestion of US President Richard Nixon was not very successful with the administration of German Chancellor Kurt Georg Kiesinger. But the topics and the preparation work done on the NATO proposal by the German authorities gained international momentum (see e.g. the Stockholm United Nations Conference on the Human Environment 1972) as the government of Willy Brandt started to apply them to the civil sphere instead.[41][clarification needed]

Also in 1969, Mikhail Budyko published a theory on the ice-albedo feedback, a foundational element of what is today known as Arctic amplification.[42] The same year a similar model was published by William D. Sellers.[43] Both studies attracted significant attention, since they hinted at the possibility for a runaway positive feedback within the global climate system.[44]

In the early 1970s, evidence that aerosols were increasing worldwide encouraged Reid Bryson and some others to warn of the possibility of severe cooling. Meanwhile, the new evidence that the timing of ice ages was set by predictable orbital cycles suggested that the climate would gradually cool, over thousands of years. For the century ahead, however, a survey of the scientific literature from 1965 to 1979 found 7 articles predicting cooling and 44 predicting warming (many other articles on climate made no prediction); the warming articles were cited much more often in subsequent scientific literature.[45] Several scientific panels from this time period concluded that more research was needed to determine whether warming or cooling was likely, indicating that the trend in the scientific literature had not yet become a consensus.[46][47][48]

John Sawyer published the study Man-made Carbon Dioxide and the "Greenhouse" Effect in 1972.[49] He summarized the knowledge of the science at the time, the anthropogenic attribution of the carbon dioxide greenhouse gas, distribution and exponential rise, findings which still hold today. Additionally he accurately predicted the rate of global warming for the period between 1972 and 2000.[50][51]

The increase of 25% CO2 expected by the end of the century therefore corresponds to an increase of 0.6°C in the world temperature – an amount somewhat greater than the climatic variation of recent centuries. – John Sawyer, 1972
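The figures quoted above can be compared with a back-of-the-envelope calculation. The sketch below assumes that equilibrium warming scales with the logarithm of the CO2 concentration and uses the roughly 2 °C per doubling reported by Manabe and Wetherald; the logarithmic scaling and the function name `warming_for_co2_ratio` are assumptions of this example, and it is not a reconstruction of how Sawyer arrived at his figure.

```python
import math


def warming_for_co2_ratio(ratio, sensitivity_per_doubling):
    """Estimate equilibrium warming (in °C) for a given CO2 concentration ratio.

    Assumes warming is proportional to log2(ratio), so that a ratio of 2
    (a doubling) returns exactly `sensitivity_per_doubling`.
    """
    if ratio <= 0:
        raise ValueError("the concentration ratio must be positive")
    return sensitivity_per_doubling * math.log2(ratio)


# Roughly 2 °C per doubling, the figure attributed to Manabe and Wetherald.
SENSITIVITY_C = 2.0

doubling = warming_for_co2_ratio(2.0, SENSITIVITY_C)
quarter_increase = warming_for_co2_ratio(1.25, SENSITIVITY_C)

print(f"Doubling of CO2:     {doubling:.2f} °C")
print(f"25% increase in CO2: {quarter_increase:.2f} °C")
```

Under these assumptions a 25% increase gives a little over 0.6 °C, which is in line with the figure in Sawyer's quote.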

The mainstream news media at the time exaggerated the warnings of the minority who expected imminent cooling. For example, in 1975, Newsweek magazine published a story that warned of "ominous signs that the Earth's weather patterns have begun to change."[52] The article continued by stating that evidence of global cooling was so strong that meteorologists were having "a hard time keeping up with it."[52] On 23 October 2006, Newsweek issued an update stating that it had been "spectacularly wrong about the near-term future".[53]

In the first two "Reports for the Club of Rome" in 1972[54] and 1974,[55] the anthropogenic climate changes by CO2 increase as well as by waste heat were mentioned. About the latter John Holdren wrote in a study[56] cited in the first report, "... that global thermal pollution is hardly our most immediate environmental threat. It could prove to be the most inexorable, however, if we are fortunate enough to evade all the rest." Simple global-scale estimates[57] that have recently been updated[58] and confirmed by more refined model calculations[59][60] show noticeable contributions from waste heat to global warming after the year 2100, if its growth rates are not strongly reduced (below the averaged 2% p.a. which has occurred since 1973).

Evidence for warming accumulated. By 1975, Manabe and Wetherald had developed a three-dimensional global climate model that gave a roughly accurate representation of the current climate. Doubling CO2 in the model's atmosphere gave a roughly 2 °C rise in global temperature.[61] Several other kinds of computer models gave similar results: it was impossible to make a model that gave something resembling the actual climate and not have the temperature rise when the CO2 concentration was increased.
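The roughly 2 °C per doubling figure can be related to Sawyer's 1972 estimate of 0.6 °C for a 25% CO2 increase with a short calculation. The sketch below assumes that warming scales with the logarithm of the CO2 concentration ratio, a common approximation that is not stated in the text above; the function name and default value are illustrative only, and the comparison ignores the difference between equilibrium and transient warming.

```python
import math


def equilibrium_warming(co2_ratio, sensitivity_per_doubling=2.0):
    """Estimate warming in degrees C for a given CO2 concentration ratio.

    Assumes (hypothetically) that warming grows with log2 of the ratio,
    so a ratio of 2.0 returns exactly `sensitivity_per_doubling`.
    """
    if co2_ratio <= 0:
        raise ValueError("co2_ratio must be positive")
    return sensitivity_per_doubling * math.log2(co2_ratio)


# A doubling reproduces the assumed sensitivity of about 2 degrees C.
print(round(equilibrium_warming(2.0), 2))   # 2.0

# A 25% increase gives about 0.64 degrees C, close to Sawyer's 0.6 degrees C.
print(round(equilibrium_warming(1.25), 2))  # 0.64
```

Under this assumption the two figures are mutually consistent, although Sawyer's number referred to warming expected by the end of the century rather than to a model equilibrium.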

The 1979 World Climate Conference (12 to 23 February) of the World Meteorological Organization concluded "it appears plausible that an increased amount of carbon dioxide in the atmosphere can contribute to a gradual warming of the lower atmosphere, especially at higher latitudes....It is possible that some effects on a regional and global scale may be detectable before the end of this century and become significant before the middle of the next century."[62]

In July 1979 the United States National Research Council published a report,[63] concluding (in part):

When it is assumed that the CO2 content of the atmosphere is doubled and statistical thermal equilibrium is achieved, the more realistic of the modeling efforts predict a global surface warming of between 2 °C and 3.5 °C, with greater increases at high latitudes. ... we have tried but have been unable to find any overlooked or underestimated physical effects that could reduce the currently estimated global warmings due to a doubling of atmospheric CO2 to negligible proportions or reverse them altogether.

By the early 1980s, the slight cooling trend from 1945 to 1975 had stopped. Aerosol pollution had decreased in many areas due to environmental legislation and changes in fuel use, and it became clear that the cooling effect from aerosols was not going to increase substantially while carbon dioxide levels were progressively increasing.

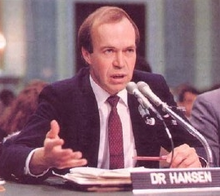

Hansen and others published the 1981 study Climate impact of increasing atmospheric carbon dioxide, and noted:

It is shown that the anthropogenic carbon dioxide warming should emerge from the noise level of natural climate variability by the end of the century, and there is a high probability of warming in the 1980s. Potential effects on climate in the 21st century include the creation of drought-prone regions in North America and central Asia as part of a shifting of climatic zones, erosion of the West Antarctic ice sheet with a consequent worldwide rise in sea level, and opening of the fabled Northwest Passage.[64]

In 1982, Greenland ice cores drilled by Hans Oeschger, Willi Dansgaard, and collaborators revealed dramatic temperature oscillations in the space of a century in the distant past.[65] The most prominent of the changes in their record corresponded to the violent Younger Dryas climate oscillation seen in shifts in types of pollen in lake beds all over Europe. Evidently drastic climate changes were possible within a human lifetime.

In 1985 a joint UNEP/WMO/ICSU Conference on the "Assessment of the Role of Carbon Dioxide and Other Greenhouse Gases in Climate Variations and Associated Impacts" concluded that greenhouse gases "are expected" to cause significant warming in the next century and that some warming is inevitable.[66]

Meanwhile, ice cores drilled by a Franco-Soviet team at the Vostok Station in Antarctica showed that CO2 and temperature had gone up and down together in wide swings through past ice ages. This confirmed the CO2-temperature relationship in a manner entirely independent of computer climate models, strongly reinforcing the emerging scientific consensus. The findings also pointed to powerful biological and geochemical feedbacks.[67]

In June 1988, James E. Hansen made one of the first assessments that human-caused warming had already measurably affected global climate.[68] Shortly after, a "World Conference on the Changing Atmosphere: Implications for Global Security" gathered hundreds of scientists and others in Toronto. They concluded that the changes in the atmosphere due to human pollution "represent a major threat to international security and are already having harmful consequences over many parts of the globe," and declared that by 2005 the world would be well-advised to push its emissions some 20% below the 1988 level.[69]

The 1980s saw important breakthroughs with regard to global environmental challenges. Ozone depletion was mitigated by the Vienna Convention (1985) and the Montreal Protocol (1987). Acid rain was mainly regulated on national and regional levels.

Colors indicate temperature anomalies (NASA/NOAA; 20 January 2016).[70]

In 1988 the WMO established the Intergovernmental Panel on Climate Change with the support of the UNEP. The IPCC continues its work through the present day, and issues a series of Assessment Reports and supplemental reports that describe the state of scientific understanding at the time each report is prepared. Scientific developments during this period are summarized about once every five to six years in the IPCC Assessment Reports, which were published in 1990 (First Assessment Report), 1995 (Second Assessment Report), 2001 (Third Assessment Report), 2007 (Fourth Assessment Report), and 2013/2014 (Fifth Assessment Report).[71]

Since the 1990s, research on climate change has expanded, linking many fields such as atmospheric science, numerical modeling, the behavioral sciences, geology, economics, and security.

Engineering and technology

One of the prominent traits of the 20th century was the dramatic growth of technology. Organized research and practice of science led to advancement in the fields of communication, engineering, travel, medicine, and war.

- The number and types of home appliances increased dramatically due to advancements in technology, electricity availability, and increases in wealth and leisure time. Such basic appliances as washing machines, clothes dryers, furnaces, exercise machines, refrigerators, freezers, electric stoves, and vacuum cleaners all became popular from the 1920s through the 1950s. The microwave oven was built on 25 October 1955, became popular during the 1980s, and had become a standard household appliance by the 1990s. Radios were popularized as a form of entertainment during the 1920s, followed by television during the 1950s. Cable and satellite television spread rapidly during the 1980s and 1990s. Personal computers began to enter the home during the 1970s and 1980s as well. The portable music player emerged during the 1960s with the development of the transistor radio, 8-track and cassette tapes, which slowly began to replace record players. These were in turn replaced by the CD during the late 1980s and 1990s. The proliferation of the Internet in the mid-to-late 1990s made digital distribution of music (MP3s) possible. VCRs were popularized in the 1970s, but by the end of the 20th century DVD players were beginning to replace them, making VHS obsolete by the end of the first decade of the 21st century.

- The first airplane was flown in 1903. With the engineering of the faster jet engine in the 1940s, mass air travel became commercially viable.

- The assembly line made mass production of the automobile viable. By the end of the 20th century, billions of people had automobiles for personal transportation. The combination of the automobile, motor boats and air travel allowed for unprecedented personal mobility. In western nations, motor vehicle accidents became the greatest cause of death for young people. However, expansion of divided highways reduced the death rate.

- The triode tube, transistor and integrated circuit successively revolutionized electronics and computers, leading to the proliferation of the personal computer in the 1980s and cell phones and the public-use Internet in the 1990s.

- New materials, most notably stainless steel, Velcro, silicone, Teflon, and plastics such as polystyrene, PVC, polyethylene, and nylon, came into widespread use for a wide variety of applications. These materials typically offer large gains in strength, temperature and chemical resistance, or other mechanical properties over those known prior to the 20th century.

- Aluminium became inexpensive and second only to iron in use.

- Semiconductor materials were discovered, and methods of production and purification developed for use in electronic devices. Silicon became one of the purest substances ever produced.

- Thousands of chemicals were developed for industrial processing and home use.

Mathematics

The 20th century saw mathematics become a major profession. As in most areas of study, the explosion of knowledge in the scientific age has led to specialization: by the end of the century there were hundreds of specialized areas in mathematics and the Mathematics Subject Classification was dozens of pages long.[72] Every year, thousands of new Ph.D.s in mathematics were awarded, and jobs were available in both teaching and industry. More and more mathematical journals were published and, by the end of the century, the development of the World Wide Web led to online publishing. Mathematical collaborations of unprecedented size and scope took place. An example is the classification of finite simple groups (also called the "enormous theorem"), whose proof between 1955 and 1983 required 500-odd journal articles by about 100 authors and filled tens of thousands of pages.

In a 1900 speech to the International Congress of Mathematicians, David Hilbert set out a list of 23 unsolved problems in mathematics. These problems, spanning many areas of mathematics, formed a central focus for much of 20th-century mathematics. Today, 10 have been solved, 7 are partially solved, and 2 are still open. The remaining 4 are too loosely formulated to be stated as solved or not.

In 1929 and 1930, it was proved that the truth or falsity of all statements formulated about the natural numbers plus either addition or multiplication (but not both) was decidable, i.e. could be determined by some algorithm. In 1931, Kurt Gödel found that this was not the case for the natural numbers plus both addition and multiplication; this system, known as Peano arithmetic, was in fact incompletable. (Peano arithmetic is adequate for a good deal of number theory, including the notion of prime number.) A consequence of Gödel's two incompleteness theorems is that in any mathematical system that includes Peano arithmetic (including all of analysis and geometry), truth necessarily outruns proof, i.e. there are true statements that cannot be proved within the system. Hence mathematics cannot be reduced to mathematical logic, and David Hilbert's dream of making all of mathematics complete and consistent needed to be reformulated.

In 1963, Paul Cohen proved that the continuum hypothesis is independent of (could neither be proved nor disproved from) the standard axioms of set theory. In 1976, Wolfgang Haken and Kenneth Appel used a computer to prove the four color theorem. Andrew Wiles, building on the work of others, proved Fermat's Last Theorem in 1995. In 1998 Thomas Callister Hales proved the Kepler conjecture.

Differential geometry came into its own when Albert Einstein used it in general relativity. Entirely new areas of mathematics such as mathematical logic, topology, and John von Neumann's game theory changed the kinds of questions that could be answered by mathematical methods. All kinds of structures were abstracted using axioms and given names such as metric spaces and topological spaces. The concept of an abstract structure was then itself abstracted, which led to category theory. Grothendieck and Serre recast algebraic geometry using sheaf theory. Large advances were made in the qualitative study of dynamical systems that Poincaré had begun in the 1890s. Measure theory was developed in the late 19th and early 20th centuries. Applications of measures include the Lebesgue integral, Kolmogorov's axiomatisation of probability theory, and ergodic theory. Knot theory greatly expanded. Quantum mechanics led to the development of functional analysis. Other new areas include Laurent Schwartz's distribution theory, fixed point theory, singularity theory and René Thom's catastrophe theory, model theory, and Mandelbrot's fractals. Lie theory with its Lie groups and Lie algebras became one of the major areas of study.

Non-standard analysis, introduced by Abraham Robinson, rehabilitated the infinitesimal approach to calculus, which had fallen into disrepute in favour of the theory of limits, by extending the field of real numbers to the hyperreal numbers, which include infinitesimal and infinite quantities. An even larger number system, the surreal numbers, was discovered by John Horton Conway in connection with combinatorial games.

The development and continual improvement of computers, at first mechanical analog machines and then digital electronic machines, allowed industry to deal with larger and larger amounts of data to facilitate mass production and distribution and communication, and new areas of mathematics were developed to deal with this: Alan Turing's computability theory; complexity theory; Derrick Henry Lehmer's use of ENIAC to further number theory and the Lucas-Lehmer test; Rózsa Péter's recursive function theory; Claude Shannon's information theory; signal processing; data analysis; optimization and other areas of operations research. In the preceding centuries much mathematical focus was on calculus and continuous functions, but the rise of computing and communication networks led to an increasing importance of discrete concepts and the expansion of combinatorics including graph theory. The speed and data processing abilities of computers also enabled the handling of mathematical problems that were too time-consuming to deal with by pencil and paper calculations, leading to areas such as numerical analysis and symbolic computation. Some of the most important methods and algorithms of the 20th century are: the simplex algorithm, the fast Fourier transform, error-correcting codes, the Kalman filter from control theory and the RSA algorithm of public-key cryptography.
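The Lucas-Lehmer test mentioned above is short enough to illustrate directly. The following is a minimal sketch of the standard test for Mersenne numbers of the form 2^p − 1; it is not a reconstruction of Lehmer's ENIAC programs, and the function name and the list of sample exponents are chosen here for illustration.

```python
def lucas_lehmer(p):
    """Return True if the Mersenne number 2**p - 1 is prime.

    The test is only valid when p itself is prime; the caller is
    expected to pass prime exponents.
    """
    if p == 2:
        return True  # 2**2 - 1 = 3 is prime; the loop below needs p >= 3
    m = (1 << p) - 1  # the Mersenne number 2**p - 1
    s = 4
    # Iterate s -> s*s - 2 (mod m) exactly p - 2 times.
    for _ in range(p - 2):
        s = (s * s - 2) % m
    return s == 0


# Check a few small prime exponents.
mersenne_exponents = [p for p in (3, 5, 7, 11, 13, 17, 19, 23, 31) if lucas_lehmer(p)]
print(mersenne_exponents)  # [3, 5, 7, 13, 17, 19, 31]
```

The exponents 11 and 23 are rejected because 2^11 − 1 = 2047 = 23 × 89 and 2^23 − 1 = 8388607 = 47 × 178481 are composite.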

Physics

- New areas of physics, like special relativity, general relativity, and quantum mechanics, were developed during the first half of the century. In the process, the internal structure of atoms came to be clearly understood, followed by the discovery of elementary particles.

- It was found that all the known forces can be traced to only four fundamental interactions. It was discovered further that two forces, electromagnetism and weak interaction, can be merged in the electroweak interaction, leaving only three different fundamental interactions.

- Discovery of nuclear reactions, in particular nuclear fusion, finally revealed the source of solar energy.

- Radiocarbon dating was invented, and became a powerful technique for determining the age of prehistoric animals and plants as well as historical objects.

- Stellar nucleosynthesis was refined as a theory in 1954 by Fred Hoyle; the theory was supported by astronomical evidence that showed chemical elements were created by nuclear fusion reactions within stars.

Quantum mechanics

From left to right, top row: Louis de Broglie (1892–1987) and Wolfgang Pauli (1900–58); second row: Erwin Schrödinger (1887–1961) and Werner Heisenberg (1901–76)

In 1924, French quantum physicist Louis de Broglie published his thesis, in which he introduced a revolutionary theory of electron waves based on wave–particle duality. In his time, the wave and particle interpretations of light and matter were seen as being at odds with one another, but de Broglie suggested that these seemingly different characteristics were instead the same behavior observed from different perspectives: particles can behave like waves, and waves (radiation) can behave like particles. De Broglie's proposal offered an explanation of the restricted motion of electrons within the atom. The first publications of de Broglie's idea of "matter waves" had drawn little attention from other physicists, but a copy of his doctoral thesis chanced to reach Einstein, whose response was enthusiastic. Einstein stressed the importance of de Broglie's work both explicitly and by building further on it.

In 1925, Austrian-born physicist Wolfgang Pauli developed the Pauli exclusion principle, which states that no two electrons around a single nucleus in an atom can occupy the same quantum state simultaneously, as described by four quantum numbers. Pauli made major contributions to quantum mechanics and quantum field theory – he was awarded the 1945 Nobel Prize for Physics for his discovery of the Pauli exclusion principle – as well as solid-state physics, and he successfully hypothesized the existence of the neutrino. In addition to his original work, he wrote masterful syntheses of several areas of physical theory that are considered classics of scientific literature.

In 1926 at the age of 39, Austrian theoretical physicist Erwin Schrödinger produced the papers that gave the foundations of quantum wave mechanics. In those papers he described his partial differential equation that is the basic equation of quantum mechanics and bears the same relation to the mechanics of the atom as Newton's equations of motion bear to planetary astronomy. Adopting a proposal made by Louis de Broglie in 1924 that particles of matter have a dual nature and in some situations act like waves, Schrödinger introduced a theory describing the behaviour of such a system by a wave equation that is now known as the Schrödinger equation. The solutions to Schrödinger's equation, unlike the solutions to Newton's equations, are wave functions that can only be related to the probable occurrence of physical events. The readily visualized sequence of events of the planetary orbits of Newton is, in quantum mechanics, replaced by the more abstract notion of probability. (This aspect of the quantum theory made Schrödinger and several other physicists profoundly unhappy, and he devoted much of his later life to formulating philosophical objections to the generally accepted interpretation of the theory that he had done so much to create.)
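For reference, the equation described above can be written out for the simplest case. The form below is the standard time-dependent Schrödinger equation for a single non-relativistic particle, together with the probability interpretation of the wave function; the symbols (wave function Ψ, mass m, potential V, reduced Planck constant ħ) are the conventional ones and do not appear in the text above.

```latex
i\hbar \frac{\partial}{\partial t}\Psi(\mathbf{r},t)
  = \left[ -\frac{\hbar^{2}}{2m}\nabla^{2} + V(\mathbf{r},t) \right] \Psi(\mathbf{r},t),
\qquad
P(\mathbf{r},t)\,d^{3}r = \left|\Psi(\mathbf{r},t)\right|^{2} d^{3}r
```

The second expression is what "probable occurrence" means in practice: the squared magnitude of the wave function gives the probability of finding the particle in a small volume around the position r at time t.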

German theoretical physicist Werner Heisenberg was one of the key creators of quantum mechanics. In 1925, Heisenberg discovered a way to formulate quantum mechanics in terms of matrices. For that discovery, he was awarded the Nobel Prize for Physics for 1932. In 1927 he published his uncertainty principle, upon which he built his philosophy and for which he is best known. Heisenberg was able to demonstrate that if you were studying an electron in an atom you could say where it was (the electron's location) or where it was going (the electron's velocity), but it was impossible to express both at the same time. He also made important contributions to the theories of the hydrodynamics of turbulent flows, the atomic nucleus, ferromagnetism, cosmic rays, and subatomic particles, and he was instrumental in planning the first West German nuclear reactor at Karlsruhe, together with a research reactor in Munich, in 1957. Considerable controversy surrounds his work on atomic research during World War II.

Social sciences

- Ivan Pavlov developed the theory of classical conditioning.

- The Austrian School of economic theory gained in prominence.

References

- ^ Agar, Jon (2012). Science in the Twentieth Century and Beyond. Cambridge: Polity Press. ISBN 978-0-7456-3469-2.

- ^ Thomson, Sir William (1862). "On the Age of the Sun's Heat". Macmillan's Magazine. 5: 288–293.

- ^ a b c "The Nobel Prize in Physiology or Medicine 1962". NobelPrize.org. Nobel Media AB. Retrieved November 5, 2011.

- ^ a b c d "James Watson, Francis Crick, Maurice Wilkins, and Rosalind Franklin". Science History Institute. June 2016. Archived from the original on 21 March 2018. Retrieved 20 March 2018.

- ^ W. Heitler and F. London, Wechselwirkung neutraler Atome und Homöopolare Bindung nach der Quantenmechanik, Z. Physik, 44, 455 (1927).

- ^ P.A.M. Dirac, Quantum Mechanics of Many-Electron Systems, Proc. R. Soc. London, A 123, 714 (1929).

- ^ C.C.J. Roothaan, A Study of Two-Center Integrals Useful in Calculations on Molecular Structure, J. Chem. Phys., 19, 1445 (1951).

- ^ Watson, J. and Crick, F., "Molecular Structure of Nucleic Acids" Nature, April 25, 1953, p 737–8

- ^ The Miller Urey Experiment – Windows to the Universe

- ^ W. J. Hehre, W. A. Lathan, R. Ditchfield, M. D. Newton, and J. A. Pople, Gaussian 70 (Quantum Chemistry Program Exchange, Program No. 237, 1970).

- ^ Catalyse de transformation des oléfines par les complexes du tungstène. II. Télomérisation des oléfines cycliques en présence d'oléfines acycliques Die Makromolekulare Chemie Volume 141, Issue 1, Date: 9 February 1971, Pages: 161–176 Par Jean-Louis Hérisson, Yves Chauvin doi:10.1002/macp.1971.021410112

- ^ Katsuki, T.; Sharpless, K. B. J. Am. Chem. Soc. 1980, 102, 5974. (doi:10.1021/ja00538a077)

- ^ Hill, J. G.; Sharpless, K. B.; Exon, C. M.; Regenye, R. Org. Synth., Coll. Vol. 7, p.461 (1990); Vol. 63, p.66 (1985). (Article)

- ^ Jacobsen, E. N.; Marko, I.; Mungall, W. S.; Schroeder, G.; Sharpless, K. B. J. Am. Chem. Soc. 1988, 110, 1968. (doi:10.1021/ja00214a053)

- ^ Kolb, H. C.; Van Nieuwenhze, M. S.; Sharpless, K. B. Chem. Rev. 1994, 94, 2483–2547. (Review) (doi:10.1021/cr00032a009)

- ^ Gonzalez, J.; Aurigemma, C.; Truesdale, L. Org. Synth., Coll. Vol. 10, p.603 (2004); Vol. 79, p.93 (2002). (Article Archived 2010-08-24 at the Wayback Machine)

- ^ Sharpless, K. B.; Patrick, D. W.; Truesdale, L. K.; Biller, S. A. J. Am. Chem. Soc. 1975, 97, 2305. (doi:10.1021/ja00841a071)

- ^ Herranz, E.; Biller, S. A.; Sharpless, K. B. J. Am. Chem. Soc. 1978, 100, 3596–3598. (doi:10.1021/ja00479a051)

- ^ Herranz, E.; Sharpless, K. B. Org. Synth., Coll. Vol. 7, p.375 (1990); Vol. 61, p.85 (1983). (Article Archived 2012-10-20 at the Wayback Machine)

- ^ "The Nobel Prize in Chemistry 1996". Nobelprize.org. The Nobel Foundation. Retrieved 2007-02-28.

- ^ "Benjamin Franklin Medal awarded to Dr. Sumio Iijima, Director of the Research Center for Advanced Carbon Materials, AIST". National Institute of Advanced Industrial Science and Technology. 2002. Archived from the original on 2007-04-04. Retrieved 2007-03-27.

- ^ First total synthesis of taxol. 1. Functionalization of the B ring. Robert A. Holton, Carmen Somoza, Hyeong Baik Kim, Feng Liang, Ronald J. Biediger, P. Douglas Boatman, Mitsuru Shindo, Chase C. Smith, Soekchan Kim, et al.; J. Am. Chem. Soc.; 1994; 116(4); 1597–1598.

- ^ First total synthesis of taxol. 2. Completion of the C and D rings. Robert A. Holton, Hyeong Baik Kim, Carmen Somoza, Feng Liang, Ronald J. Biediger, P. Douglas Boatman, Mitsuru Shindo, Chase C. Smith, Soekchan Kim, et al.; J. Am. Chem. Soc.; 1994; 116(4); 1599–1600.

- ^ A synthesis of taxusin. Robert A. Holton, R. R. Juo, Hyeong B. Kim, Andrew D. Williams, Shinya Harusawa, Richard E. Lowenthal, Sadamu Yogai; J. Am. Chem. Soc.; 1988; 110(19); 6558–6560.

- ^ "Cornell and Wieman Share 2001 Nobel Prize in Physics". NIST News Release. National Institute of Standards and Technology. 2001. Archived from the original on 2007-06-10. Retrieved 2007-03-27.

- ^ Wegener, Alfred (1912). "Die Herausbildung der Grossformen der Erdrinde (Kontinente und Ozeane), auf geophysikalischer Grundlage" (PDF). Petermanns Geographische Mitteilungen. 63: 185–95, 253–56, 305–09.

- ^ Holmes, Arthur (1931). "Radioactivity and Earth Movements" (PDF). Transactions of the Geological Society of Glasgow. 18 (3). Geological Society of Glasgow: 559–606. doi:10.1144/transglas.18.3.559. S2CID 122872384.

- ^ Hess, H. H. (1 November 1962). "History of Ocean Basins" (PDF). In A. E. J. Engel; Harold L. James; B. F. Leonard (eds.). Petrologic studies: a volume in honor of A. F. Buddington. Boulder, CO: Geological Society of America. pp. 599–620.

- ^ Wilson, J.T. (1963). "Hypothesis on the Earth's behaviour". Nature. 198 (4884): 849–65. Bibcode:1963Natur.198..849H. doi:10.1038/198849a0. S2CID 4209203.

- ^ Wilson, J. Tuzo (1965). "A new class of faults and their bearing on continental drift". Nature. 207 (4995): 343–47. Bibcode:1965Natur.207..343W. doi:10.1038/207343a0. S2CID 4294401.

- ^ Blacket, P.M.S.; Bullard, E.; Runcorn, S.K., eds. (1965). "A Symposium on Continental Drift, held on 28 October 1965". Philosophical Transactions of the Royal Society A. 258 (1088). Royal Society of London.

- ^ a b Spencer Weart (2011). "Changing Sun, Changing Climate". The Discovery of Global Warming.

- ^ a b Hufbauer, K. (1991). Exploring the Sun: Solar Science since Galileo. Baltimore, MD: Johns Hopkins University Press.

- ^ Lamb, Hubert H. (1997). Through All the Changing Scenes of Life: A Meteorologist's Tale. Norfolk, UK: Taverner. pp. 192–193. ISBN 1-901470-02-4.

- ^ a b Spencer Weart (2011). "Past Climate Cycles: Ice Age Speculations". The Discovery of Global Warming.

- ^ Fleming, James R. (2007). The Callendar Effect. The Life and Work of Guy Stewart Callendar (1898–1964), the Scientist Who Established the Carbon Dioxide Theory of Climate Change. Boston, MA: American Meteorological Society. ISBN 978-1878220769.

- ^ Spencer Weart (2011). "General Circulation Models of Climate". The Discovery of Global Warming.

- ^ Manabe S.; Wetherald R. T. (1967). "Thermal Equilibrium of the Atmosphere with a Given Distribution of Relative Humidity". Journal of the Atmospheric Sciences. 24 (3): 241–259. Bibcode:1967JAtS...24..241M. doi:10.1175/1520-0469(1967)024<0241:teotaw>2.0.co;2.

- ^ Ehrlich, Paul R. (1968). The Population Bomb. San Francisco: Sierra Club. p. 52.

- ^ E. Robinson; R.C. Robbins (1968). "Smoke & Fumes, Sources, Abundance & Fate of Atmospheric Pollutants". Stanford Research Institute.

- ^ a b Die Frühgeschichte der globalen Umweltkrise und die Formierung der deutschen Umweltpolitik (1950–1973) (Early history of the environmental crisis and the setup of German environmental policy 1950–1973), Kai F. Hünemörder, Franz Steiner Verlag, 2004. ISBN 3-515-08188-7

- ^ "Ice in Action: Sea ice at the North Pole has something to say about climate change". YaleScientific. 2016.

- ^ William D. Sellers (1969). "A Global Climatic Model Based on the Energy Balance of the Earth-Atmosphere System". Journal of Applied Meteorology. 8 (3): 392–400. Bibcode:1969JApMe...8..392S. doi:10.1175/1520-0450(1969)008<0392:AGCMBO>2.0.CO;2.

- ^ Jonathan D. Oldfield (2016). "Mikhail Budyko's (1920–2001) contributions to Global Climate Science: from heat balances to climate change and global ecology". Advanced Review. 7 (5): 682–692. doi:10.1002/wcc.412.

- ^ Peterson, T.C.; W.M. Connolley; J. Fleck (2008). "The Myth of the 1970s Global Cooling Scientific Consensus" (PDF). Bulletin of the American Meteorological Society. 89 (9): 1325–1337. Bibcode:2008BAMS...89.1325P. doi:10.1175/2008BAMS2370.1. S2CID 123635044.

- ^ Science and the Challenges Ahead. Report of the National Science Board. Washington, D.C. : National Science Board, National Science Foundation. 1974.

- ^ W M Connolley. "The 1975 US National Academy of Sciences/National Research Council Report". Retrieved 28 June 2009.

- ^ Reid A. Bryson: "A Reconciliation of several Theories of Climate Change", in: John P. Holdren (Ed.): Global Ecology. Readings toward a Rational Strategy for Man, New York etc. 1971, pp. 78–84

- ^ J. S. Sawyer (1 September 1972). "Man-made Carbon Dioxide and the "Greenhouse" Effect". Nature. 239 (5366): 23–26. Bibcode:1972Natur.239...23S. doi:10.1038/239023a0. S2CID 4180899.

- ^ Neville Nicholls (30 August 2007). "Climate: Sawyer predicted rate of warming in 1972". Nature. 448 (7157): 992. Bibcode:2007Natur.448..992N. doi:10.1038/448992c. PMID 17728736.

- ^ Dana Andrew Nuccitelli (3 March 2015). Climatology versus Pseudoscience: Exposing the Failed Predictions of Global Warming Skeptics. Nature. pp. 22–25. ISBN 9781440832024.

- ^ a b Peter Gwynne (1975). "The Cooling World" (PDF). Archived from the original (PDF) on 2013-04-20. Retrieved 2020-06-17.

- ^ Jerry Adler (23 October 2006). "Climate Change: Prediction Perils". Newsweek.

- ^ Meadows, D., et al., The Limits to Growth. New York 1972.

- ^ Mesarovic, M., Pestel, E., Mankind at the Turning Point. New York 1974.

- ^ John P. Holdren: "Global Thermal Pollution", in: John P. Holdren (Ed.): Global Ecology. Readings toward a Rational Strategy for Man, New York etc. 1971, pp. 85–88. The author became Director of the White House Office of Science and Technology Policy in 2009.

- ^ R. Döpel, "Über die geophysikalische Schranke der industriellen Energieerzeugung." Wissenschaftl. Zeitschrift der Technischen Hochschule Ilmenau, ISSN 0043-6917, Bd. 19 (1973, H.2), 37–52. online.

- ^ H. Arnold, "Robert Döpel and his Model of Global Warming. An Early Warning – and its Update." Universitätsverlag Ilmenau (Germany) 2013. ISBN 978-3-86360 063-1 online

- ^ Chaisson E. J. (2008). "Long-Term Global Heating from Energy Usage". Eos. 89 (28): 253–260. Bibcode:2008EOSTr..89..253C. doi:10.1029/2008eo280001.

- ^ Flanner, M. G. (2009). "Integrating anthropogenic heat flux with global climate models". Geophys. Res. Lett. 36 (2): L02801. Bibcode:2009GeoRL..36.2801F. doi:10.1029/2008GL036465.

- ^ Manabe S.; Wetherald R. T. (1975). "The Effects of Doubling the CO2 Concentration on the Climate of a General Circulation Model". Journal of the Atmospheric Sciences. 32 (3): 3–15. Bibcode:1975JAtS...32....3M. doi:10.1175/1520-0469(1975)032<0003:teodtc>2.0.co;2.

- ^ "Declaration of the World Climate Conference" (PDF). World Meteorological Organization. Retrieved 28 June 2009.

- ^ National Research Council (1979). Carbon Dioxide and Climate: A Scientific Assessment. Washington, D.C.: The National Academies Press. doi:10.17226/12181. ISBN 978-0-309-11910-8.

- ^ Hansen, J.; et al. (1981). "Climate impact of increasing atmospheric carbon dioxide". Science. 213 (4511): 957–966. Bibcode:1981Sci...213..957H. doi:10.1126/science.213.4511.957. PMID 17789014. S2CID 20971423.

- ^ Dansgaard W.; et al. (1982). "A New Greenland Deep Ice Core". Science. 218 (4579): 1273–77. Bibcode:1982Sci...218.1273D. doi:10.1126/science.218.4579.1273. PMID 17770148. S2CID 35224174.

- ^ World Meteorological Organisation (WMO) (1986). "Report of the International Conference on the assessment of the role of carbon dioxide and of other greenhouse gases in climate variations and associated impacts". Villach, Austria. Archived from the original on 21 November 2013. Retrieved 28 June 2009.

- ^ Lorius Claude; et al. (1985). "A 150,000-Year Climatic Record from Antarctic Ice". Nature. 316 (6029): 591–596. Bibcode:1985Natur.316..591L. doi:10.1038/316591a0. S2CID 4368173.

- ^ "Statement of Dr. James Hansen, Director, NASA Goddard Institute for Space Studies" (PDF). The Guardian. London. Retrieved 28 June 2009.

- ^ WMO (World Meteorological Organization) (1989). The Changing Atmosphere: Implications for Global Security, Toronto, Canada, 27–30 June 1988: Conference Proceedings (PDF). Geneva: Secretariat of the World Meteorological Organization. Archived from the original (PDF) on 2012-06-29.

- ^ Brown, Dwayne; Cabbage, Michael; McCarthy, Leslie; Norton, Karen (20 January 2016). "NASA, NOAA Analyses Reveal Record-Shattering Global Warm Temperatures in 2015". NASA. Retrieved 21 January 2016.

- ^ "IPCC – Intergovernmental Panel on Climate Change". ipcc.ch.

- ^ Mathematics Subject Classification 2000